By Nicolas Gambardella

Machine Translation (MT) is one of the most discussed topics in the world of translators at the moment (on par with collapsing fees). Most of the arguments revolve around either its usefulness or the threat it poses to professional human translators. We briefly touched on it in a previous post, but we would like to go a bit deeper here.

What is MT?

Wikipedia tells us that machine translation is a sub-field of computational linguistics that investigates the use of software to translate text or speech from one language to another (be warned: this Wikipedia page is quite outdated, as evidenced by the tiny mention of the neural network-based approach). In the world of translation, this means the automatic translation of a piece of text by software that analyzes the source, without human intervention. This is different from (and complementary to) systems based on translation memories.

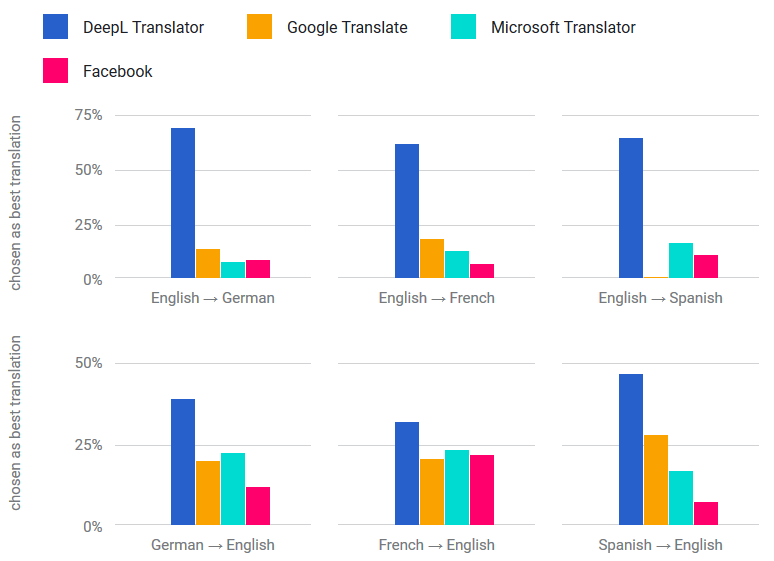

This post is not a technical essay on the inner workings of MT, and we are not going to explain how the translation is actually done. Many approaches have been proposed over the years, with increasing success. However, the paradigm shift happened – as in many other domains – when people started to use “deep learning”, i.e. cascades of artificial neural networks trained on huge amounts of data (for more technical information, you can read Google’s Neural Machine Translation (NMT) paper on arXiv). Suddenly, one could actually copy-paste an e-mail or a webpage into a translation tool and understand what it was about. Sure, the result is not perfect. Let’s be frank, it is often quite bad and sometimes funny. But it is understandable, and looks more or less like what a human with an intermediate level in a foreign language could produce when translating a text about a topic they know nothing about. And the spelling and grammar are better than in many of the e-mails, text messages and Facebook posts we are all subjected to daily. The latest major improvement came with the DeepL system, whose network was trained using the Linguee database of existing translations.

How does the professional translation world work?

In order to understand the disruption brought by MT, it is useful to recapitulate how a large part of the professional translation market is organized. There are exceptions to what we describe below, fields of translation where people interact differently, such as companies with embedded translation offices, authors dealing directly with their translators, etc. We are not concerned with these here, although MT presumably has a large impact there as well. First of all, three different jobs are involved in the production of a translated document: 1) Translation per se; 2) Editing (for which the source document is needed), where one checks that the translation is accurate and that all requirements have been followed (e.g. no translation of person and product names); and 3) Proofreading (for which the source document is not needed), where one checks spelling, grammar, punctuation, etc. This is the so-called TEP (Translation, Editing, Proofreading) workflow.

Typically, when the end client needs a translation, they will either contact a translation company or post a job advert on one of the many available websites, either non-specialized – such as Upwork or Freelancer.com – or specialized in translation – such as TranslatorsBase or TranslatorCafé. The companies can be genuine translation companies, performing the translation in-house, or agencies that outsource the work. In most cases, though, some outsourcing will be involved, since very few companies have enough employees to cover all language pairs and all fields of expertise. Such outsourcing is done through the company’s own network of freelancers, via professional platforms such as ProZ, or through the sites mentioned above. Sometimes, the outsourcing process does not stop there, and a cascade of subcontracting unfolds, with fees decreasing at each rung of the ladder. Unfortunately, as the fees decrease, so does the quality of the translation. This is why outsourcers put a revision step in place. This can be a mere proofreading exercise, fixing spelling, punctuation and the occasional grammar issue. Or it can turn into a heavier editing task, correcting translation mistakes. In the case of an outsourcing cascade, this can effectively become a retranslation.

How is MT affecting the translation pipeline?

Before the advent of NMT, MT produced text so bad that it took a professional translator longer to fix it than to retranslate from scratch. A machine-translated text was also immediately obvious, even when compared to bad human translations. All that has now changed. The quality of the translations produced has increased dramatically (at least in certain cases, as we discuss further on) and large amounts of text can be translated very quickly. While the free online versions generally limit each translation to a few thousand characters, one can go beyond that via APIs (with or without fees, see for instance the R package

This had two consequences, one ethical, one unethical, but both unfortunate. The first is that some agencies think they can stop outsourcing the human translation part of a job and only pay for the revision part. The second is that some freelancers claim to translate texts themselves while they merely use MT followed by a superficial revision. To be honest, in the latter case we are generally at the bottom of the subcontracting cascade, and the human translation would be quite bad anyway. In both cases, the result is a text that requires editing rather than proofreading. In the first case, agencies are honest and openly acknowledge it, offering Machine Translation Post-Editing (MTPE) jobs. But, and this is the crux of the problem, in both cases the rate offered is at the level of proofreading rather than editing.

Improved MT also brought another change to the working practices of professional translators. Many translators use Computer-Aided Translation (CAT) tools. Typically, such a tool divides the source text into segments that are translated separately. These tools now give access to MT engines that suggest translations for segments, as an alternative to Translation Memories (TMs), even if one could argue that DeepL is somehow linked to an über-TM in the form of the Linguee database.
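The segment-based workflow described above can be illustrated with a minimal Python sketch. It is not how any particular CAT tool works: the sentence splitter is deliberately naive (real tools use configurable, language-specific segmentation rules, such as those exchanged in the SRX format), the translation memory is a plain dictionary with exact matches only (real TMs also offer fuzzy matches), and `machine_translate` is a hypothetical stand-in for an MT engine.

```python
import re
from typing import Callable, Dict, List, Tuple


def segment(text: str) -> List[str]:
    """Naively split text into sentence-like segments.

    Assumption: a segment ends with '.', '!' or '?' followed by whitespace.
    Real CAT tools use far richer, language-specific rules.
    """
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def suggest(
    segments: List[str],
    memory: Dict[str, str],
    machine_translate: Callable[[str], str],
) -> List[Tuple[str, str, str]]:
    """Return (source, suggestion, origin) for each segment.

    An exact translation-memory match wins; otherwise the hypothetical
    MT engine provides a draft that still needs human review.
    """
    suggestions = []
    for src in segments:
        if src in memory:
            suggestions.append((src, memory[src], "TM"))
        else:
            suggestions.append((src, machine_translate(src), "MT"))
    return suggestions


if __name__ == "__main__":
    tm = {"Bonjour.": "Hello."}
    # Stand-in for a real MT engine: it only tags the segment as a draft.
    fake_mt = lambda s: f"[MT draft] {s}"
    for src, sugg, origin in suggest(segment("Bonjour. Il pleut."), tm, fake_mt):
        print(f"{origin}: {src!r} -> {sugg!r}")
```

With these toy inputs, the first segment comes from the memory and the second is an MT draft, which the translator would still have to check before accepting it.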

The Luddites

Understandably, the world of professional translation has been shaken by the sudden rise of NMT. In a couple of years, what was seen as a promising field of research became a game-changer. The reaction in such situations is always the same, broadly following the five stages of grief. Because of the past history of the field, most translators went through the denial phase. Many are still stuck there. Taking the cases where MT performs badly (albeit no worse than a casual translator not doing their homework) as evidence, they reject its relevance entirely. A portion of the community has moved on to the bargaining phase (trying to avoid or compete with MT), and some are even in the acceptance phase. However, a very vocal part of the community is currently in the anger phase. In some sense, they are similar to the Luddites, who opposed industrialization for fear that it would destroy their jobs. However, since they cannot break the MT engines, they turn their anger towards the translators using them. They are mistaken in exactly the same way as the 19th-century Luddites. They fear that the change of paradigm will remove the need for skilled workers and replace them with cheap unskilled ones. In fact, exactly the opposite will happen, as it did back then, when automation created highly skilled jobs and removed poorly paid manual ones. The segment of the translation market most affected by MT will be non-technical, low-quality translation, while the skills of specialized human translators will be better recognized than when they were lost in an ocean of mediocre translators. This brings us to the strengths and weaknesses of MT.

How good is MT?

So, machine translation has improved tremendously, but how good is it for practical purposes? Sure, we have all come across funny translations, and we could all do with a good laugh. However, for simple texts, the result is OK.

This suggests a range of situations where MT could be used: everyday discourse, children’s stories, factual descriptions, and news. What

By contrast, MT fails with highly specialized and technical documents, where the language requires specific prior knowledge on the part of the reader, not shared by the general population. Why is that? Because MT cannot cope with several situations, including the following:

- When a word has several widely different meanings, and the source text does not use the most frequent one. For instance, in the ecclesiastical world, the French word “coule” designates a garment worn by monks. MT, however, will always assume that “coule” is a verb meaning either that some liquid is moving downwards, or that something is being submerged by water. The proposed translations will be flow, run, pour, sink, cast (if what is flowing is metal or cement), stream, trickle, or even founder. It will never be cowl.

- When different languages use different words or expressions for the same idea. Here we find the famous “il pleut comme vache qui pisse” (literally, “it rains like a pissing cow”), whose English counterpart is “it’s raining cats and dogs”. Same underlying meaning, totally different expression. In general, such figurative expressions tend to be translated literally by MT, resulting in completely meaningless sentences.

- Meronymy/holonymy, that is, when the word used in one language represents part of the thing that the equivalent word in another language represents. I am not talking about synecdoche here, which is a stylistic figure that uses the part for the whole or the other way around.

- Hyponymy and hypernymy, that is, when a word in one language represents a generalization of the thing represented by the word in the other language. For instance, “seagull” is a layperson’s English word representing a subset of the family Laridae. In French, there is no such layperson’s term. Instead, one will use either “goéland”, representing the genus Larus, which are big birds, or “mouette”, representing several genera of the subfamily Larinae, which are small birds. MT has no way to know which one the author of the source text meant (even if the previous sentence clarified the issue).

- Complex relationships. In English, the temporal bone of the skull is divided into parts with different embryological origins (the squamous, petrous and tympanic bones). In French, the temporal bone is divided into regions of the adult structure: the “écaille”, “rocher”, and “mastoïde”. It is impossible to translate one into the other; one has to reconstruct the entire description.

- Context-dependent translation. MT typically focuses on a word and its immediate surroundings. For instance, a human translator will understand that in the sentences “La fille regarda les jouets qu’on lui avait offert. Son ballon était bleu et son vélo rouge”, the ball and the bike belong to the girl. MT cannot determine that. Both Google Translate (GT) and DeepL translate it as: “The girl looked at the toys that had been given to her. His balloon was blue and his bike red.” (which, by the way, is a great example of unintended but real sexism).

I am certain there are other areas where MT performs unevenly or badly (for instance, household names, slang, etc.).

Among the other issues presented by MT are two problems that mirror each other. Since MT engines have no memory of the entire text, the same word can be translated differently in different parts. Sometimes this does not matter, as with “stream” and “trickle” in the example above. Sometimes it does, for instance if we get “stream” in one place and “cowl” in another! Conversely, because MT engines were built on a given training set, they tend to produce texts that are boring in terms of vocabulary and “robotic” in terms of style. To be fair, this is much less of a problem with

Two ways of using MT in professional translation

At the heart of the debate and disagreement around MT in the professional translation setting lies a lack of clarity on the way it is and/or should be used. At the moment, there are two very different ways of using MT for translation:

1) using MT to perform the whole translation, and asking third parties to review the results.

2) using MT as one piece of the toolkit used to perform translations, for instance to provide starting points or alternatives for segments, in parallel to translation memories.

Many agencies and publishers think MT is ready for 1), but it is not. Let’s be really clear here: MT is not the key to automatically – and cheaply – translating corpora of texts, be they articles, books, or anything else.

Furthermore, reviewing translations performed that way is extremely difficult. It is by no means a proofreading exercise, but rather an editing exercise. We had to edit large texts that comprised parts translated by MT and parts translated by a human who was clearly not a native speaker of the target language. Both types were difficult to edit. However, there was one crucial difference: while the human-translated parts presented a horrendous style and many grammatical mistakes, the MT parts presented WRONG translations. In most cases, this is much worse. For instance, in the biomedical domain, a tiny misunderstanding might lead to dreadful consequences.

Conversely, many professional translators think or claim that MT is not ready for 2), and cut themselves off from a very useful tool. We have wholeheartedly adopted 2). We think much improvement is still needed, and that it is possible (see below). We believe translators, like any professionals, need to take control of their tools. When farmers work their fields, they use various technologies. But one rarely sees third parties, completely unaware of what was done to the ground and how, coming to evaluate the work. They just buy the product. We think MT should be used by translators, not blindly, but in a controlled manner. Then we will be able to learn from it, and also help it grow into an even more useful tool.

How to use MT efficiently

- Use MT on a segment-by-segment basis rather than for the whole text (what makes a segment is left to the imagination or the preferences of the reader/translator).

- Never accept a proposed translation blindly. Check all the important words, as well as tenses and agreements.

- Make full use of the alternatives provided, for instance, by DeepL. The proposed choice is statistical, but the right or more accurate one is often among the first 3-5 alternatives.

- Once a significant chunk of text is translated, re-read it in its entirety to make the style more homogeneous and reduce repetitions. To be fair, this is not specific to MT, and should always be done.

- Back-translate the text from the target to the source language, in order to spot possible ambiguities or mistranslations.
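The back-translation tip can be partly automated to decide which segments deserve a closer look. The Python sketch below is only an illustration under stated assumptions: `back_translate` is a hypothetical stand-in for whatever MT engine one uses (here a toy dictionary), the word-level similarity from the standard library’s `difflib` is a crude proxy for meaning, and the 0.75 threshold is arbitrary. A low score flags a segment for human review; a high score proves nothing, since an engine can make the same mistake in both directions.

```python
from difflib import SequenceMatcher
from typing import Callable, List, Tuple


def similarity(a: str, b: str) -> float:
    """Word-level similarity in [0, 1], ignoring case (a crude proxy for meaning)."""
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def flag_for_review(
    pairs: List[Tuple[str, str]],
    back_translate: Callable[[str], str],
    threshold: float = 0.75,  # arbitrary assumption, not a validated value
) -> List[Tuple[str, str, float]]:
    """Return (source, target, score) for segments whose back-translation drifts.

    `pairs` holds (source, target) segments; `back_translate` is a hypothetical
    function mapping target-language text back to the source language.
    """
    flagged = []
    for source, target in pairs:
        score = similarity(source, back_translate(target))
        if score < threshold:
            flagged.append((source, target, score))
    return flagged


if __name__ == "__main__":
    segments = [
        ("La fille regarda ses jouets.", "The girl looked at her toys."),
        ("Le moine porte une coule.", "The monk wears a flow."),  # "coule" mistranslated
    ]
    # Toy back-translation table standing in for a real MT engine.
    toy_engine = {
        "The girl looked at her toys.": "La fille regarda ses jouets.",
        "The monk wears a flow.": "Le moine porte un écoulement.",
    }
    for source, target, score in flag_for_review(segments, toy_engine.__getitem__):
        print(f"check: {source!r} -> {target!r} (similarity {score:.2f})")
```

With these toy inputs, only the monk sentence is flagged (similarity 0.60), because its back-translation no longer contains “une coule”.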

What do you think? Are you using Machine Translation at the moment? Which systems? How?